“Digital transformation” got popular faster than it got precise, so it’s used to describe everything from “move to cloud” to “launch a new app” to “replace SAP” to “start using AI.” And lately, we are hearing more about the fatigue than the success.

Digital transformation fatigue isn’t a “change management” problem. It’s what happens when leaders increase the volume and disruption of change without:

- Increasing the organization’s ability to absorb that change.

- Producing outcomes that justify the cost.

Teams stay busy, stakeholders stop believing, adoption stalls, and the transformation narrative quietly turns into a punchline.

But the fix isn’t motivational. It’s architectural.

In this piece, we’ll redefine digital transformation in a way that can’t be reduced to “adopting tools,” then break down the six failure mechanisms that create fatigue by design, including what changes in OT-heavy environments, where uptime and safety rewrite the rules. Finally, you’ll get a quick diagnostic checklist to assess whether you are accidentally manufacturing fatigue, plus a 90-day reset plan that restores credibility without pausing transformation entirely.

TL;DR

- Digital transformation is not “adopting tools.” It’s redesigning the value-creation system (customer journeys, operating model, and control systems) so outcomes are produced by software, data, and automation at scale.

- Transformation fatigue isn’t a morale issue. It’s a systemic failure mode: change load rises, but outcome proof and absorption capacity don’t.

- Most fatigue is manufactured by six leader-driven mechanisms: initiative overload, output obsession, tech-first sequencing, operating model mismatch, ownership fog, and underfunded change absorption.

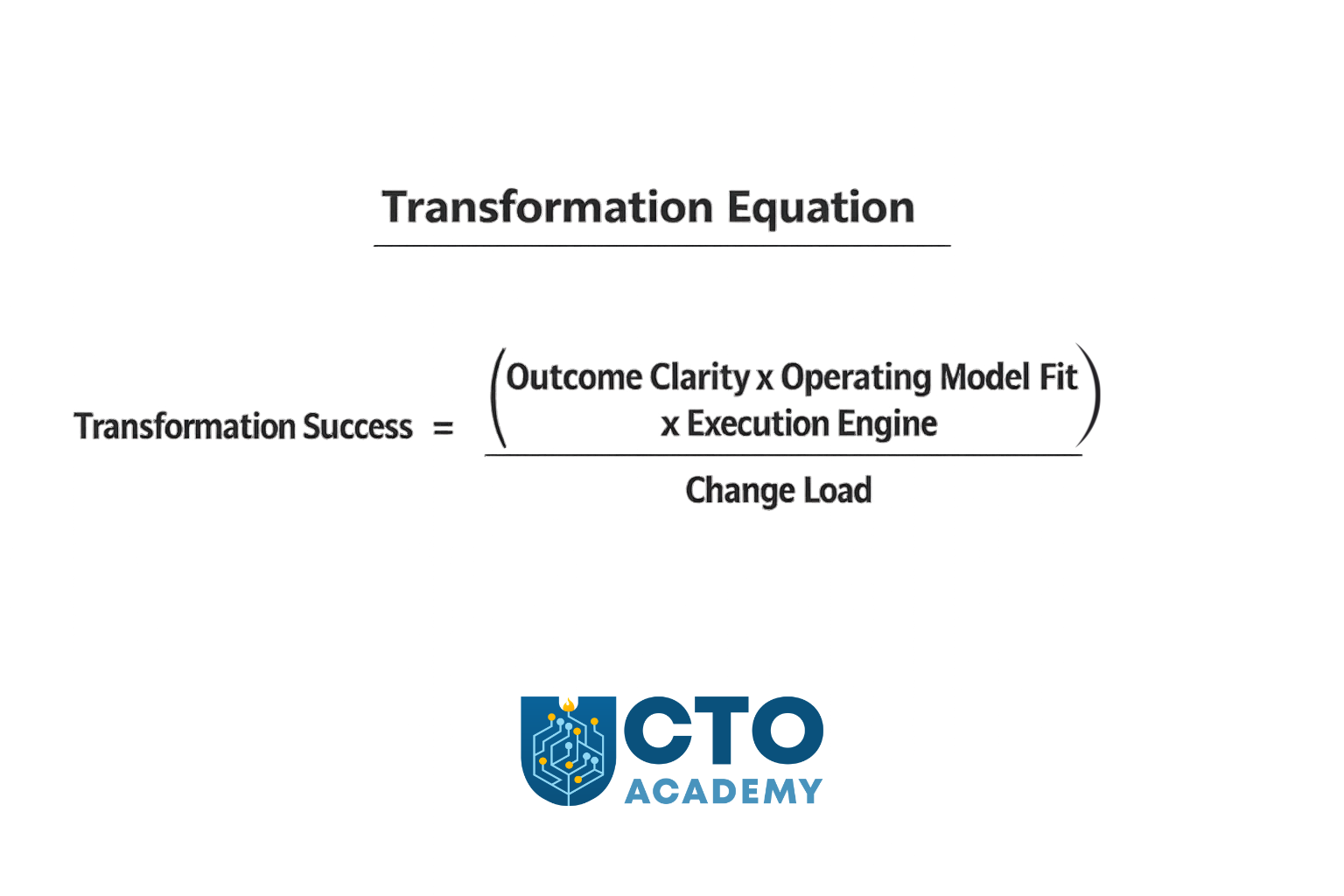

- Use the Transformation Equation to govern decisions:

Success = (Outcome Clarity × Operating Model Fit × Execution Engine) ÷ Change Load. If the denominator grows faster than the numerator, fatigue becomes inevitable.

- Run transformation as three portfolios with WIP caps: Run Better (reliability/security/cost), Change the Business (platform/data/automation), Grow the Business (revenue/conversion/retention). Govern them differently.

- Add the missing governance layer: an Absorption Budget (quarterly cap on how many teams/functions you can disrupt) + an absorption plan for any initiative you want to scale (training, workflow redesign, adoption KPIs, rollback, operational impact).

- IT vs OT: same failure mechanisms, but OT has harsher penalties (uptime/safety constraints, site variability, constrained change windows). Treating OT like “IT in factories” creates multi-year distrust.

- The practical fix is a 90-day reset: regain control (WIP caps + absorption budget), re-anchor to outcomes/ownership, then prove the new system with 1–2 lighthouse value streams.

NOTE: This tutorial is an extension of a Module-8 lecture on Digital Transformation, delivered by Sally Eaves, in CTO Academy’s Digital MBA for Technology Leaders.


Let’s begin with a CTO/CDO/CDTO/VP-grade definition of digital transformation, one that is harder to misuse or misunderstand than the generic definition you’ll find in Google’s AI Overview:

The Correct Definition of Digital Transformation

Digital transformation is the deliberate redesign of a company’s value creation system—its customer journeys, operating model, and control systems—so it can deliver measurable outcomes (growth, speed, efficiency, resilience) through software, data, and automation at scale.

CTO Academy

In other words, it is a process of converting a business from “people moving work through tools” to “systems moving work through software, data, and automated decisioning,” with measurable impact on unit economics, time-to-value, and risk.

This pulls the concept away from “integrating technologies” and toward the core reason: compounding advantage (faster learning loops, lower marginal cost, better control).

What This Definition Changes (vs the generic one)

Most definitions of digital transformation start with “adopting digital technologies.” That’s the wrong starting point. Technology is an input. Transformation is an outcome. And the outcome is not “being more digital.”

When properly defined, digital transformation forces clarity on three things:

- Business reason for change

- Operating model required to execute it

- Proof that the change is working

Let’s break it down for better clarity.

1. It’s outcome-first, not technology-first

The generic definition people commonly use starts with “integration of digital technologies.” The correct one, on the other hand, starts with “redesign of value creation” and insists on measurable outcomes.

Translation:

If you can’t name the outcome, it’s not transformation — it’s modernization.

Therefore, a transformation is only a transformation if it changes at least one of:

- Unit economics (cost-to-serve, margin per unit)

- Cycle time (idea → production → outcome)

- Reliability/risk (incidents, auditability, security posture)

- Learning velocity (experiment cadence + decision quality)

TIP: The moment you define it this way, you can also define why it fails.

2. It makes the “operating model” non-optional

Most failures (and fatigue) happen when the org tries to bolt new tech onto the old operating model.

So the definition explicitly includes:

- Customer journeys/value streams.

- Operating model (decision rights, teams, funding, governance).

- Control systems (risk, compliance, security, observability).

3. It recognizes the “price of change”

Transformation is not what you build. It’s what the organization can absorb, adopt, and operationalize without breaking. If adoption and repeatability aren’t designed in, you’re creating fatigue by design.

Transformation fatigue is, therefore, the tax you pay when the change rate exceeds absorption capacity. So the definition implies a constraint: “at scale” means it has to be repeatable, governable, and adoptable — not just a pilot.

What Transformation is Not

You’ll avoid a lot of confusion by calling these out early:

- Not “cloud migration.” That’s one enabler, not the goal.

- Not “agile adoption.” Agile is a delivery method; transformation is a business redesign.

- Not “ERP replacement.” That might be necessary, but it’s rarely sufficient.

- Not “AI strategy.” AI can accelerate value if data/process foundations exist.

- Not “digitizing existing processes.” Sometimes you must delete steps, not automate them.

4 Key Aspects of the Digital Transformation Framework

As a leader responsible for the transformation, you want a clean framework that maps to execution:

- Value model redesign: what value are we optimizing? Growth, cost-to-serve, time-to-market, resilience, compliance, or safety.

- Operating model redesign: how decisions get made, how teams are structured, how funding works, and what gets measured.

- Digital execution engine: delivery capability, including platform, data products, automation, CI/CD, reliability, and security by design.

- Change absorption capacity: adoption, enablement, workload/WIP limits, communication, incentives, and training.

TIP: That last one is the missing piece in most definitions, and it’s where fatigue lives.

The 4 Drivers of the Framework

Instead of “efficiency/agility/experience/data-driven,” which are so broad they’re unfalsifiable, it is better to use “board-level proofs”:

- Cycle time compression: time from idea → production → measurable impact goes down.

- Marginal cost reduction: cost-to-serve per customer/order/ticket goes down through automation.

- Reliability and risk control: fewer incidents, faster recovery, better auditability/security posture.

- Learning velocity: faster experimentation and decision-making using trustworthy data.

As you can see, these are measurable, and they map directly to portfolio decisions.

Rule of thumb:

If you can roll it back without affecting business performance, it wasn’t transformation.

Digitization vs Digitalization vs Transformation (a useful distinction)

Digitization converts analog into digital.

Digitalization applies digital tools to existing processes.

Both can matter, but they rarely change the business model or unit economics on their own.

Transformation is different: it changes how value is created and how reliably the organization can change itself.

What Are Companies Actually Buying (when they hire someone to “design and kick off digital transformation”)?

They’re buying a leader who can do four things, in this order:

1. Define the transformation thesis

- What value are we pursuing (growth, speed, cost, resilience)?

- Which parts of the business are in scope (customer acquisition, fulfillment, underwriting, supply chain, finance, HR)?

- What “capabilities” must exist at the end? (e.g., omnichannel pricing, real-time inventory visibility, self-serve onboarding, automated compliance evidence)

They want to see: a Transformation Thesis (1–2 pages) + a capability map that ties tech change to business outcomes.

2. Turn it into a portfolio, not a program

Transformation fails when it’s treated as one massive program with a single finish line. A better model is 3 portfolios running in parallel:

- Run Better: reliability, security, cost, operational excellence.

- Change the Business: process redesign, data/automation, platform upgrades.

- Grow the Business: new product lines, monetization, digital channels.

They want to see: a 12–18-month transformation portfolio with funding slices and measurable outcomes.

3. Build the “digital engine” (the organizational capability to change)

This is the part most execs underestimate. Tools don’t transform; operating systems do. Its key components are:

- Product operating model (teams aligned to outcomes, not projects).

- Modern delivery (CI/CD, test automation, release discipline).

- Platform thinking (shared services, internal developer experience).

- Data as product (ownership, quality SLAs, governance that enables).

- Security integrated (threat modeling, access patterns, evidence automation).

They want to see: a target operating model + initial team topology (who owns what, how they interact).

4. De-risk execution through sequencing and proof

You don’t start with a 3-year plan. You start with 90 days of proof, then scale.

Deliverable: a 90-day launch plan that includes:

- 1–2 lighthouse initiatives tied to measurable business value.

- 1 platform/foundation initiative (enablement).

- 1 governance & metrics initiative (visibility + control).

What companies definitely do not want is digital transformation fatigue.

“Fatigue” as a Systemic Failure Mode, Not a Morale Issue

Fatigue is usually interpreted as “people don’t like change.” That’s too simplistic.

A more accurate framing for senior leaders is this:

Transformation fatigue is the cost of change without compounding outcomes. It spikes when leaders increase the volume and disruption of change without increasing the organization’s ability to absorb and operationalize it.

When that happens, the organization experiences constant upheaval and still can’t point to the value. And it’s usually a consequence of one or more failure mechanisms.

The Six Failure Mechanisms Leaders Accidentally Design In

These aren’t generic “reasons transformations fail.” They’re mechanisms: patterns that consistently produce the same three outcomes:

- Stalled value

- Low adoption

- Organizational exhaustion

Most orgs have 1–2 primary failure modes; fix those first. Let’s look at each mechanism in more detail.

#1: Initiative Overload (no WIP limits, no “stop doing”)

When transformation becomes an umbrella for everything, it becomes unfundable, unstaffable, and ungovernable.

The tell-tale sign is that the initiative list keeps growing, but nothing ever stops.

This creates constant context switching, fragile dependencies, and a permanent sense of urgency without any notable momentum.

The fastest way to correct this is to cap transformation work-in-progress and make stopping work a leadership responsibility.

#2: Output Obsession (shipping without outcome ownership)

Features ship. Platforms launch. Migrations complete. And the business still asks: “What changed?”

When success is defined by delivery artifacts, the organization loses the one thing that sustains belief: measurable outcomes.

So if you keep hearing: “We’re busy, and nothing improves,” this is it.

To fix it, ensure that every initiative has one outcome metric, one business owner, and one technology owner.
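If initiatives are tracked in a portfolio tool or a spreadsheet export, the “one metric, one business owner, one tech owner” rule can be checked mechanically. The sketch below assumes a hypothetical `Initiative` record and interprets “one” as exactly one; the field and initiative names are illustrative, not taken from any specific tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Initiative:
    # Hypothetical record; field names are illustrative only.
    name: str
    outcome_metrics: List[str] = field(default_factory=list)
    business_owners: List[str] = field(default_factory=list)
    tech_owners: List[str] = field(default_factory=list)


def ownership_gaps(initiative: Initiative) -> List[str]:
    """Return rule violations; an empty list means the initiative passes."""
    checks = {
        "outcome metric": initiative.outcome_metrics,
        "business owner": initiative.business_owners,
        "tech owner": initiative.tech_owners,
    }
    gaps = []
    for label, values in checks.items():
        # Strict interpretation: exactly one of each, not zero and not a committee.
        if len(values) != 1:
            gaps.append(f"needs exactly one {label}, has {len(values)}")
    return gaps


migration = Initiative(
    name="Billing platform migration",
    outcome_metrics=[],  # delivery milestone only, no outcome metric
    business_owners=["VP Finance", "VP Sales"],
    tech_owners=["Head of Platform"],
)
print(ownership_gaps(migration))
# ['needs exactly one outcome metric, has 0', 'needs exactly one business owner, has 2']
```

The two co-sponsoring business owners are flagged on purpose: shared sponsorship without a single accountable owner is the ownership fog described in mechanism #5.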

#3: Tech-first Sequencing (tools before value-stream redesign)

Cloud, ERP, data platform, AI: each chosen as the strategy instead of as an enabler of the strategy.

This typically creates friction because the operating model and workflows remain unchanged. You are essentially adding new tools to old workflows, which raises cognitive load, slows execution, and increases rework.

What you should do instead is start with value streams and design the minimal foundations required to move the metric.

#4: Operating Model Mismatch (modern work funded and governed like projects)

A product operating model can’t be executed through a project governance mindset. Platform work can’t survive quarterly “project justification.” Data ownership can’t be a committee.

If any of this is true, it creates handoffs, scope churn, slow decision-making, and fragile accountability.

To correct it, fund products/platforms as persistent capabilities with clear ownership and measurable SLAs.

#5: Ownership Fog (IT “delivers,” business “sponsors”)

Transformation fails when it’s treated as an IT program with business endorsement.

Without explicit decision rights and shared accountability, business units opt out. Delivery teams become service providers. Politics fills the vacuum. You end up with alignment theater, escalation culture, and local workarounds.

To prevent this, or to reverse it once it has set in, flip the model:

- Define decision rights.

- Create joint ownership.

- Make trade-offs visible.

#6: Change Absorption is Underfunded (enablement is an afterthought)

Training, comms, workflow redesign, and adoption measurement are treated as “nice to have.” But this is not a soft issue; it is an execution dependency. Underfunding it creates low adoption, shadow processes, and the familiar complaint that “the new way is harder than the old way.”

The only way around it is to make enablement a first-class workstream with adoption KPIs.

The Transformation Equation (the model most programs are missing)

Most transformations fail because leaders treat execution as the primary problem. Execution matters, but it’s downstream of design.

The Transformation Equation:

Transformation Success = (Outcome Clarity × Operating Model Fit × Execution Engine) ÷ Change Load

- Outcome clarity: the metric you’re trying to move and how you’ll prove it

- Operating model fit: ownership, funding, decision rights, governance

- Execution engine: platform + delivery discipline + data/automation + security

- Change load: number of concurrent initiatives × disruption per team/function

Remember:

When change load rises faster than the numerator, fatigue becomes inevitable.
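To see why the denominator matters, the equation can be turned into a toy calculation. The sketch below assumes each numerator factor is scored from 0 to 1 and that change load is concurrent initiatives × average disruption per team (also scored 0 to 1). The article does not prescribe a scale, so treat the result as a relative signal for comparing quarters, not an absolute score.

```python
from dataclasses import dataclass


@dataclass
class TransformationState:
    # Assumed 0-1 scores for the three numerator factors.
    outcome_clarity: float
    operating_model_fit: float
    execution_engine: float
    # Change load inputs.
    concurrent_initiatives: int
    disruption_per_team: float  # assumed 0-1 average disruption

    def change_load(self) -> float:
        # Change load = number of concurrent initiatives x disruption per team/function
        return self.concurrent_initiatives * self.disruption_per_team

    def success_index(self) -> float:
        load = self.change_load()
        if load <= 0:
            raise ValueError("change load must be positive")
        numerator = self.outcome_clarity * self.operating_model_fit * self.execution_engine
        return numerator / load


last_quarter = TransformationState(0.6, 0.5, 0.5, concurrent_initiatives=4, disruption_per_team=0.5)
# The execution engine improves a little, but the initiative count triples.
this_quarter = TransformationState(0.6, 0.5, 0.6, concurrent_initiatives=12, disruption_per_team=0.5)

print(round(last_quarter.success_index(), 3))  # 0.075
print(round(this_quarter.success_index(), 3))  # 0.03
```

The numerator rose by 20%, but change load tripled, so the index fell by more than half: the arithmetic version of “fatigue becomes inevitable.”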

How to Use the Equation in Real Leadership Decisions

If you want this to be actionable, use it as a gating mechanism:

- If outcome clarity is weak → do not scale.

- If operating model fit is missing → do not add more initiatives.

- If the execution engine is immature → reduce change load before pushing AI or “enterprise platforms.”
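The three gates can be written as an explicit pre-approval check, so that “do not scale” becomes a recorded decision rather than a judgment call made under pressure. This is a minimal sketch: the boolean inputs stand in for whatever evidence your review actually uses, and the function name is hypothetical.

```python
from typing import List


def equation_gates(outcome_clarity_ok: bool,
                   operating_model_fit_ok: bool,
                   execution_engine_mature: bool) -> List[str]:
    """Return the restrictions implied by the Transformation Equation gates."""
    restrictions = []
    if not outcome_clarity_ok:
        restrictions.append("do not scale")
    if not operating_model_fit_ok:
        restrictions.append("do not add more initiatives")
    if not execution_engine_mature:
        restrictions.append("reduce change load before pushing AI or enterprise platforms")
    return restrictions


# Clear outcome, but the ownership/funding model is not a fit yet and the engine is immature.
print(equation_gates(True, False, False))
# ['do not add more initiatives', 'reduce change load before pushing AI or enterprise platforms']
```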

How to Run Transformation Without Creating Fatigue

The Transformation Equation is the model. This section is the operating system.

If you want transformation to compound instead of exhaust the organization, you need two governance upgrades most programs skip:

- A portfolio structure that forces trade-offs.

- A quarterly absorption limit that keeps the change load realistic.

The Portfolio Triad: Run Better/Change the Business/Grow the Business

Most transformations fail because everything is treated as the same kind of work. It isn’t.

The better approach is to run three portfolios in parallel, each with an explicit WIP cap, and govern them differently:

Portfolio 1: Run Better (reliability, security, cost-to-serve)

This is the portfolio that keeps the lights on while the business changes.

- Typical initiatives: SLOs, observability, security-by-design, cost optimization, resilience, and incident reduction.

- What “winning” looks like: fewer incidents, faster recovery, lower cost-to-serve, stronger control posture.

Portfolio 2: Change the Business (process redesign, platform, data/automation)

This is where operating model redesign meets enabling foundations.

- Typical initiatives: workflow redesign, platform enablement, data products, automation, integration patterns.

- What “winning” looks like: shorter cycle times, higher reuse, fewer handoffs, measurable adoption.

Portfolio 3: Grow the Business (digital revenue, conversion, retention)

This portfolio exists to move business metrics (not ship features).

- Typical initiatives: self-serve onboarding, pricing/packaging experiments, digital channel optimization, product-led growth loops, and personalization.

- What “winning” looks like: conversion lift, retention lift, revenue growth, CAC/LTV movement.

Important:

Each portfolio needs its own metric set and governance cadence. If you govern “Grow” like “Run Better,” you’ll slow it down. If you govern “Run Better” like “Grow,” you’ll destabilize operations.

Portfolio Triad KPIs and Governance Cadence (Table 1)

| Portfolio | Primary executive intent | Example KPIs (pick 3–5, don’t boil the ocean) | Governance cadence |
|---|---|---|---|
| Run Better (reliability, security, cost-to-serve) | Reduce operational drag and risk while freeing capacity | SLO attainment, incident rate & MTTR, change failure rate, security control coverage, cost-to-serve/unit cost, audit finding burn-down | Monthly risk + reliability review; weekly ops/health check for hotspots |
| Change the Business (process redesign, platform, data/automation) | Increase the organization’s ability to change safely and repeatedly | Lead time for change, deployment frequency (where relevant), % automated workflow steps, platform adoption (active usage), reuse rate, data quality SLAs, integration cycle time | Bi-weekly delivery review; monthly outcome + adoption review |
| Grow the Business (digital revenue, conversion, retention) | Move business metrics through digital channels/products | Conversion rate, retention/churn, revenue per user/account, CAC/LTV trend, activation rate, experiment throughput, funnel drop-off, NPS/CSAT (where applicable) | Weekly growth review (metrics + experiments); monthly strategic bets review |

Rule of thumb:

If you use the same cadence and success criteria for all three portfolios, you’ll slow growth to a crawl, destabilize operations, or both.
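WIP caps only work if the cap is enforced at the moment someone asks to start something new. Below is a minimal sketch, assuming one cap per portfolio and borrowing the 90-day plan’s “replace something already in flight” rule as the only way past a full cap. The `Portfolio` class and the initiative names are hypothetical.

```python
from typing import Optional, Set


class Portfolio:
    def __init__(self, name: str, wip_cap: int) -> None:
        self.name = name
        self.wip_cap = wip_cap
        self.in_flight: Set[str] = set()

    def start(self, initiative: str, replaces: Optional[str] = None) -> None:
        """Start an initiative, optionally stopping another one in its place."""
        if replaces is not None and replaces not in self.in_flight:
            raise ValueError(f"{replaces!r} is not in flight in {self.name}")
        freed = 1 if replaces is not None else 0
        if len(self.in_flight) - freed + 1 > self.wip_cap:
            # Stopping work is a leadership decision: force it to be explicit.
            raise ValueError(
                f"{self.name} is at its WIP cap of {self.wip_cap}; "
                "name an in-flight initiative to stop first"
            )
        if replaces is not None:
            self.in_flight.remove(replaces)
        self.in_flight.add(initiative)


grow = Portfolio("Grow the Business", wip_cap=2)
grow.start("Self-serve onboarding")
grow.start("Pricing experiments")
try:
    grow.start("Personalization")
except ValueError as err:
    print(err)  # the cap blocks net-new work
grow.start("Personalization", replaces="Pricing experiments")
print(sorted(grow.in_flight))  # ['Personalization', 'Self-serve onboarding']
```

Each portfolio gets its own object and cap, which keeps the Run Better/Change/Grow trade-offs separate instead of pooling all work into one list.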

The Absorption Budget (the missing governance layer)

Transformation fatigue is what happens when the change load exceeds the organization’s capacity to absorb it. Most leaders don’t manage that capacity explicitly; they discover it after adoption stalls.

The Absorption Budget (definition)

CTO Academy

Every quarter, define the maximum number of teams/functions that can be meaningfully disrupted.

That number becomes your “absorption budget,” and it caps concurrent transformation load.
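One way to apply the budget is to admit initiatives in priority order and defer any that would push the number of distinct disrupted teams/functions past the cap. The sketch makes two assumptions the article does not: candidates arrive already ranked, and a team disrupted by two initiatives counts once. If your teams absorb change poorly when hit twice, count per-initiative disruption instead. All initiative and team names are hypothetical.

```python
from typing import List, Set, Tuple


def admit_within_budget(
    ranked_candidates: List[Tuple[str, Set[str]]],
    budget: int,
) -> Tuple[List[str], List[str]]:
    """Greedy admission: keep the number of distinct disrupted teams <= budget."""
    disrupted: Set[str] = set()
    admitted: List[str] = []
    deferred: List[str] = []
    for name, teams in ranked_candidates:
        if len(disrupted | teams) <= budget:
            disrupted |= teams
            admitted.append(name)
        else:
            deferred.append(name)  # revisit next quarter
    return admitted, deferred


candidates = [
    ("CRM rollout", {"sales", "support"}),
    ("Finance ERP module", {"finance", "procurement"}),
    ("Self-serve onboarding", {"sales", "marketing"}),
    ("Finance data product", {"finance"}),
]
print(admit_within_budget(candidates, budget=4))
# (['CRM rollout', 'Finance ERP module', 'Finance data product'], ['Self-serve onboarding'])
```

A lower-ranked initiative can still be admitted if it only touches teams that are already being disrupted; whether that is acceptable is a policy choice for the portfolio owners.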

Require an absorption plan for every initiative

Before an initiative is approved to scale, it needs an absorption plan that answers:

- Training + comms: who needs to learn what, by when, and how will we support them?

- Workflow redesign: what will change in day-to-day work (not just in tooling)?

- Adoption KPI + rollback plan: how will we measure real adoption, and what happens if it fails?

- Operational impact assessment: what breaks if this change lands poorly? (especially in OT contexts)
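Because the plan is a scaling precondition, it is easy to check for completeness before an initiative reaches a steering meeting. The sketch uses a hypothetical `AbsorptionPlan` record whose fields mirror the four questions above, with adoption KPI and rollback split into separate fields. It checks only that each answer exists, not that it is any good.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class AbsorptionPlan:
    # Hypothetical fields mirroring the absorption-plan questions.
    training_and_comms: str = ""
    workflow_redesign: str = ""
    adoption_kpi: str = ""
    rollback_plan: str = ""
    operational_impact: str = ""


def missing_sections(plan: AbsorptionPlan) -> List[str]:
    """Names of blank sections; scaling should wait until this list is empty."""
    return [f.name for f in fields(plan) if not getattr(plan, f.name).strip()]


plan = AbsorptionPlan(
    training_and_comms="Supervisors trained by week 3; floor briefings per shift",
    workflow_redesign="Paper sign-off replaced by tablet checklist",
    adoption_kpi="Share of work orders closed in the new flow, weekly",
    operational_impact="No change during the quarterly shutdown window",
)
print(missing_sections(plan))  # ['rollback_plan']
```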

The executive benefit of an absorption budget

It turns the transformation from “who can yell loudest gets priority” into a capacity-managed system:

- Fewer initiatives, higher throughput

- Faster time-to-first-win

- Higher adoption and less rework

- Lower burnout and attrition risk

IT vs OT: Same Failure Mechanisms, Different Penalties

Many executives treat OT transformation like “IT, but in factories.” That’s how you create multi-year distrust.

OT environments introduce constraints that change the failure profile:

- Availability and safety dominate.

- Site variability is real.

- Change windows are constrained.

- Legacy and vendor ecosystems are sticky.

The question everybody asks is: Where do IT leaders get OT transformations wrong?

If you do any of the following, expect pilot purgatory:

- Assuming a rollout template will scale across sites unchanged.

- Pushing IT security/change practices that OT operations can’t tolerate.

- Treating IT/OT collaboration as integration work instead of a shared operating model.

Bottom line: in IT, bad sequencing causes delays and cost overruns. In OT, bad sequencing can create downtime, safety exposure, and long-lived resistance to future change.

The 90-Day Fatigue Reset Plan (without pausing transformation)

Keep in mind that the following plan does not mean “slow down.” It means “reduce unabsorbed change and restore outcome credibility.”

The 90-Day Reset Plan

Days 1–15: Stop the bleeding

- Freeze net-new initiatives unless they replace something already in-flight.

- Publish a Stop/Start/Continue list at exec level (make trade-offs explicit).

- Stand up the Portfolio Triad (Run Better/Change/Grow) and assign owners.

- Set portfolio WIP caps (maximum concurrent initiatives per portfolio).

- Define your quarterly Absorption Budget (max teams/functions that can be disrupted).

Days 16–45: Re-anchor to outcomes and ownership

- For every initiative: one outcome metric, one business owner, one tech owner.

- Require an absorption plan to scale (training + workflow redesign + adoption KPI + rollback).

- Kill/merge anything that can’t meet this bar.

- Define decision rights and governance cadence (monthly outcomes, weekly delivery where needed).

Days 46–90: Prove the new system works

- Run 1–2 lighthouse value streams that must move a business metric in <6 months.

- Stand up enablement as a first-class workstream (training, comms, office hours, adoption dashboards).

- For OT: define rollout-by-site strategy + change windows + safety gating + operational impact assessment.

Remember:

Your goal in the first 15 days isn’t progress — it’s control.

What does a “lighthouse value stream” actually mean?

A lighthouse is not a pilot. It’s a cross-system value stream that forces alignment, proves the operating model, and moves a business metric in under six months, while producing reusable capabilities (data pipeline patterns, identity/access patterns, deployment discipline, observability, audit evidence).
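The lighthouse criteria can be applied as a simple screen when choosing among candidate value streams. In this sketch, “cross-system” is interpreted as touching at least two systems, and the six-month limit is checked against the team’s own estimate. Both interpretations, and the `LighthouseCandidate` record itself, are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LighthouseCandidate:
    # Hypothetical record for screening candidate value streams.
    name: str
    systems_touched: int
    forces_ownership_alignment: bool
    months_to_metric_movement: float  # team estimate
    reusable_capabilities: List[str] = field(default_factory=list)


def lighthouse_gaps(c: LighthouseCandidate) -> List[str]:
    gaps = []
    if c.systems_touched < 2:
        gaps.append("not cross-system")
    if not c.forces_ownership_alignment:
        gaps.append("does not force alignment on ownership and decision rights")
    if c.months_to_metric_movement >= 6:
        gaps.append("will not move a business metric in under six months")
    if not c.reusable_capabilities:
        gaps.append("produces no reusable capabilities")
    return gaps


order_to_cash = LighthouseCandidate(
    "Order-to-cash", systems_touched=3, forces_ownership_alignment=True,
    months_to_metric_movement=5,
    reusable_capabilities=["identity/access pattern", "audit evidence pipeline"],
)
dashboard_pilot = LighthouseCandidate(
    "Sales dashboard pilot", systems_touched=1, forces_ownership_alignment=False,
    months_to_metric_movement=4,
)
print(lighthouse_gaps(order_to_cash))  # []
print(lighthouse_gaps(dashboard_pilot))
# ['not cross-system', 'does not force alignment on ownership and decision rights',
#  'produces no reusable capabilities']
```

The dashboard pilot may still be worth doing, but it is a pilot, not a lighthouse.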

A Quick Diagnostic: Are You Manufacturing Fatigue?

If three or more are true, you don’t have a “change management problem.” You have a transformation design problem.

Symptoms Checklist

- You have 10+ transformation initiatives live, and none have stopped.

- Success is measured by milestones, not business outcomes.

- Adoption is assumed, not measured.

- Digital is “owned by IT,” with the business sponsoring.

- Pilots exist, scaling doesn’t.

- OT rollouts are treated like IT rollouts.

- Enablement has no budget, no owner, and no KPIs.

Symptom-to-Mechanism Mapping (Table 2)

| Symptom you see in the org | Most likely failure mechanism | What leaders usually do (wrong) | First corrective action (high leverage) |
|---|---|---|---|
| “We’re running 10–20 initiatives, and everything is late.” | #1 Initiative overload | Keep adding programs to satisfy stakeholders | Set portfolio WIP limits; publish a Stop/Start/Continue list tied to capacity |
| “We shipped a lot…but the business asks what changed.” | #2 Output obsession | Report milestones, launches, and migrations as successes | Require one outcome metric per initiative; name one business owner + one tech owner |
| “Adoption is low. People go back to spreadsheets and email.” | #6 Underfunded absorption | Treat enablement as comms/training “later” | Create an enablement workstream with adoption KPIs (active usage, process compliance, time-to-proficiency) |
| “Every initiative needs 6 approvals, and decisions take weeks.” | #5 Ownership fog (and operating model mismatch) | Create committees instead of decision rights | Define decision rights (who decides, when, with what data); run a monthly outcomes review |
| “We’re modernizing platforms, but delivery speed isn’t improving.” | #4 Operating model mismatch | Fund product/platform work like projects; rotate teams | Move funding to persistent teams (products/platforms) with measurable SLAs and clear ownership boundaries |
| “Cloud/ERP/data platform was the strategy. Value is ‘later.’” | #3 Tech-first sequencing | Start with the tool rollout, assume value will follow | Pivot to value streams: pick 1–2 lighthouses and build only the minimal foundations needed to move a metric |
| “We have pilots everywhere, but nothing scales.” | #1 Overload + #3 Tech-first + #5 Ownership fog | Treat pilots as progress; avoid hard choices | Enforce a pilot exit bar (adoption + metric movement + repeatable pattern); kill pilots that don’t qualify |
| “Teams are burning out; our best people are always ‘on transformation.’” | #1 Overload + #6 Absorption gap | Overload top talent; run transformation as extra work | Create an absorption budget (max disruption per quarter); rebalance staffing so transformation isn’t “after hours” |
| “Data is ‘strategic’, but nobody trusts the numbers.” | #4 Operating model mismatch | Treat data as a platform project without ownership | Move to data products: named owners, quality SLAs, and metrics tied to decisions (not dashboards) |
| “Security/compliance keeps blocking delivery.” | #5 Ownership fog (control systems not designed) | Handle risk late via gatekeeping | Shift to built-in controls: threat modeling early, automated evidence, clear exception process with risk acceptance |
| “OT teams reject changes; sites diverge; rollout is chaotic.” | OT penalty on #3 and #6 | Apply IT rollout patterns to OT realities | Roll out by site archetype, design change windows, and include operators in workflow redesign; measure adoption and operational impact |
| “Incidents increased after releases; reliability is worse.” | #3 Tech-first + #4 Operating model mismatch | Optimize for shipping, underinvest in reliability | Add SLOs/operability to the definition of done; fund reliability as part of the transformation portfolio (“Run Better”) |

This is how you should use this table in leadership meetings:

- Pick the top 3 symptoms causing the most pain.

- Treat the matching mechanisms as your “primary failure mode.”

Remember, don’t just manage symptoms; remove the mechanism.
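For teams that want to make this exercise repeatable, Table 2 can be encoded as a lookup and the mechanisms behind the top three symptoms tallied. Counting how often each mechanism appears is one simple way to pick the primary failure mode; it is an assumption layered on the table, not part of it, and the short symptom keys are hypothetical labels for the table rows.

```python
from collections import Counter
from typing import List, Tuple

MECHANISMS = {
    1: "Initiative overload",
    2: "Output obsession",
    3: "Tech-first sequencing",
    4: "Operating model mismatch",
    5: "Ownership fog",
    6: "Underfunded absorption",
}

# Table 2 rows, keyed by short (hypothetical) labels -> likely mechanism numbers.
SYMPTOM_TO_MECHANISMS = {
    "many initiatives, all late": [1],
    "shipped a lot, nothing changed": [2],
    "low adoption": [6],
    "slow approvals": [5, 4],
    "platforms modernized, delivery not faster": [4],
    "tool rollout was the strategy": [3],
    "pilots everywhere, nothing scales": [1, 3, 5],
    "burnout": [1, 6],
    "nobody trusts the data": [4],
    "security blocks delivery": [5],
    "OT rejects changes": [3, 6],
    "incidents up after releases": [3, 4],
}


def rank_mechanisms(top_symptoms: List[str]) -> List[Tuple[str, int]]:
    """Tally mechanisms behind the chosen symptoms, most frequent first."""
    if len(top_symptoms) > 3:
        raise ValueError("pick at most the top 3 symptoms")
    counts = Counter(m for s in top_symptoms for m in SYMPTOM_TO_MECHANISMS[s])
    return [(MECHANISMS[m], n) for m, n in counts.most_common()]


ranking = rank_mechanisms(
    ["pilots everywhere, nothing scales", "burnout", "many initiatives, all late"]
)
print(ranking[0])  # ('Initiative overload', 3)
```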

Key Takeaways

Your job isn’t to “drive digital transformation.” Your job is to redesign the system so outcomes are produced by software, data, and automation, without exceeding the organization’s capacity to absorb change.

If your organization is experiencing digital transformation fatigue, don’t start by asking how to persuade people to embrace change. Instead, start by asking a harder question: what have we designed that makes fatigue the rational response?

Fatigue isn’t random. It’s the predictable result of:

- Too much concurrent change.

- Success measured as output instead of outcome.

- New technology layered onto an old operating model.

- Unclear ownership and decision rights.

- An underfunded absorption engine (enablement, training, workflow redesign, adoption measurement).

Redefine transformation as what it actually is: a redesign of the value-creation system so outcomes are produced by software, data, and automation at scale. Then use the Transformation Equation as your governance model: increase outcome clarity, operating model fit, and execution capability, or reduce change load. That’s how you restore belief.

The goal isn’t less transformation. The goal is less unabsorbed transformation and more compounding outcomes.

When you get that right, fatigue stops being a morale crisis and becomes what it should be: an early warning indicator you know how to act on.

Frequently Asked Questions (FAQ)

What’s the simplest way to tell “transformation” from “modernization”?

If you can’t point to a measurable shift in unit economics, cycle time, reliability/risk, or learning velocity, it’s probably modernization. Transformation changes how value is created and proven—not just what systems you run.

Why does transformation fatigue happen even when teams are delivering a lot?

Because output is not an outcome. When leaders increase initiative volume and disruption but don’t increase outcome clarity and absorption capacity, the org experiences constant change without compounding results—so belief collapses.

Which failure mechanism creates the most damage most often?

Initiative overload is the multiplier. Too many concurrent initiatives drive context switching, dependency gridlock, and “permanent urgency,” which then worsens every other failure mechanism (output obsession, tech-first sequencing, adoption failure, etc.).

How do I reduce fatigue without “slowing down transformation”?

Don’t slow down—reduce unabsorbed change. Use portfolio WIP caps, define a quarterly Absorption Budget, and require an absorption plan (training, workflow redesign, adoption KPIs, rollback, operational impact) before scaling. This typically increases throughput by removing thrash.

What’s a practical “absorption plan” template?

At a minimum, it answers four questions:

1) Who needs to change behavior, and what do they need to learn? (training + comms)

2) What changes in day-to-day workflow beyond the tool/UI? (workflow redesign)

3) How will we measure real adoption, and what’s the rollback if it fails? (adoption KPI + rollback)

4) What breaks if this lands poorly (especially OT)? (operational impact assessment)

What’s the biggest difference between IT and OT transformation risk?

In OT, the system constraints are different: availability and safety dominate, sites vary, change windows are constrained, and legacy/vendor ecosystems are sticky. The same leadership mistakes (especially tech-first sequencing and underfunded absorption) carry higher penalties: downtime, safety exposure, and long-lived distrust.

How do I pick a “lighthouse value stream” that actually proves something?

A lighthouse isn’t a pilot. It must (a) be cross-system, (b) force alignment on ownership and decision rights, (c) move a business metric in <6 months, and (d) produce reusable capabilities (delivery discipline, data patterns, observability, audit evidence).

What should I do first if I suspect we’re “manufacturing fatigue”?

Start with control, not progress: freeze net-new work unless it replaces something, publish Stop/Start/Continue, stand up the Portfolio Triad, set WIP caps, and define the quarterly Absorption Budget. Then re-anchor every initiative to one outcome metric with one business and one tech owner.